Wave-Trainer-Fit: Neural Vocoder with Trainable Prior and Fixed-Point Iteration towards High-Quality Speech Generation from SSL features

Accepted to ICASSP 2026

Authors: Hien Ohnaka, Yuma Shirahata, Masaya Kawamura

[arxiv][code (will be available)]


Background and Motivation

With the development of recent self-supervised learning (SSL) models, generation tasks from SSL features have also achieved success [1, 2]. Neural vocoders that take SSL features as input are crucial components, because they set the upper bound on output quality in these tasks. WaveFit [3] is a vocoder that has already been used successfully for speech generation from SSL features [1, 2]. It is a fixed-point iteration vocoder that combines GAN training with diffusion-model-like iterative inference.
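In the formulation of [3], each inference step roughly removes a predicted noise component and then applies a gain adjustment, $z_{t-1} = \mathcal{G}(z_t - \mathcal{F}(z_t, c, t), c)$. The minimal sketch below illustrates only this fixed-point structure, not the WaveFit implementation: `denoiser` and `gain` are hypothetical stand-ins for the trained network $\mathcal{F}$ and the gain operator $\mathcal{G}$, and the toy denoiser in the usage lines simply pulls the estimate halfway toward a known target.

```python
import numpy as np


def fixed_point_inference(denoiser, gain, cond, z_T, num_iters=5):
    """Iterate z_{t-1} = G(z_t - F(z_t, c, t), c) from t = T down to 1.

    denoiser(z, cond, t) plays the role of F and gain(y, cond) the role of G;
    both are hypothetical callables supplied by the caller.
    """
    z = z_T
    for t in range(num_iters, 0, -1):
        y = z - denoiser(z, cond, t)  # remove the predicted noise component
        z = gain(y, cond)             # rescale the refined estimate
    return z


# Toy usage: the "conditioning" here is the clean target itself (illustration only).
rng = np.random.default_rng(0)
target = np.sin(np.linspace(0.0, 2.0 * np.pi, 100))
z_T = rng.standard_normal(100)
out = fixed_point_inference(
    denoiser=lambda z, c, t: 0.5 * (z - c),  # toy F: half of the residual
    gain=lambda y, c: y,                     # identity gain for the toy
    cond=target,
    z_T=z_T,
    num_iters=5,
)
```

In this toy setting each step halves the residual, so after five steps the error is 1/32 of the initial error. This is why a starting point closer to the target can reduce the number of iterations needed.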

However, compared with waveform generation from mel-spectrograms, WaveFit conditioned on SSL features has two limitations:

- Initial noise sampling
  - WaveFit from mel-spectrograms: A well-designed noise sampling method [4] is available. It is expected to provide the model with a reasonable prior for waveform generation, but it requires spectral envelope information.
  - WaveFit from SSL features: Because the spectral envelope cannot be obtained from SSL features, noise was sampled from a standard normal distribution $\mathcal{N}(0,I)$. Compared with the approach above, this may degrade performance.

- Gain adjustment
  - WaveFit from mel-spectrograms: The predicted waveform $z_t$ is gain-adjusted using its power $P_z$ and the ground-truth power $P_c$ obtained from the mel-spectrogram $c$: $\mathcal{G}(z_t, c) = \sqrt{P_c / (P_z + s)}\, z_t$, where $s$ is a small constant that prevents division by zero. As a result, the vocoder is freed from the implicit energy estimation task and can focus on essential waveform modeling.
  - WaveFit from SSL features: Because the ground-truth power cannot be obtained from SSL features, the following reference-free gain adjustment was applied instead: $\hat{\mathcal{G}}(z_t) = 0.9 \cdot z_t / \max(\mathrm{abs}(z_t))$. This adjustment forfeits the advantage described above.
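The two gain operators can be contrasted with a short sketch. It assumes that $P_z$ and $P_c$ are utterance-level mean-square powers and uses a hypothetical value for $s$; the source does not specify how the powers are computed, so treat this as an illustration of the formulas rather than the reference implementation.

```python
import numpy as np


def gain_mel(z_t, p_c, s=1e-8):
    """G(z_t, c) = sqrt(P_c / (P_z + s)) * z_t.

    Assumption: P_z is the mean-square power of the whole utterance and
    p_c is the ground-truth power derived from the mel-spectrogram c.
    The default s is a hypothetical choice.
    """
    p_z = np.mean(z_t ** 2)
    return np.sqrt(p_c / (p_z + s)) * z_t


def gain_ref_free(z_t):
    """hat{G}(z_t) = 0.9 * z_t / max(abs(z_t)): peak normalization to 0.9."""
    return 0.9 * z_t / np.max(np.abs(z_t))


rng = np.random.default_rng(0)
z = rng.standard_normal(16000)   # stand-in for a predicted waveform
y_mel = gain_mel(z, p_c=0.01)    # output power is set by the reference (~0.01)
y_free = gain_ref_free(z)        # only the peak is fixed (0.9)
```

With $\mathcal{G}$, the output power is dictated by the reference; with $\hat{\mathcal{G}}$, only the peak is fixed, so the resulting power depends on the generated signal itself rather than on any reference.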

Our goal is to improve the performance of WaveFit from SSL features by closing these gaps relative to WaveFit from mel-spectrograms. To achieve this, we introduce trainable priors inspired by RestoreGrad [5].

Proposed method

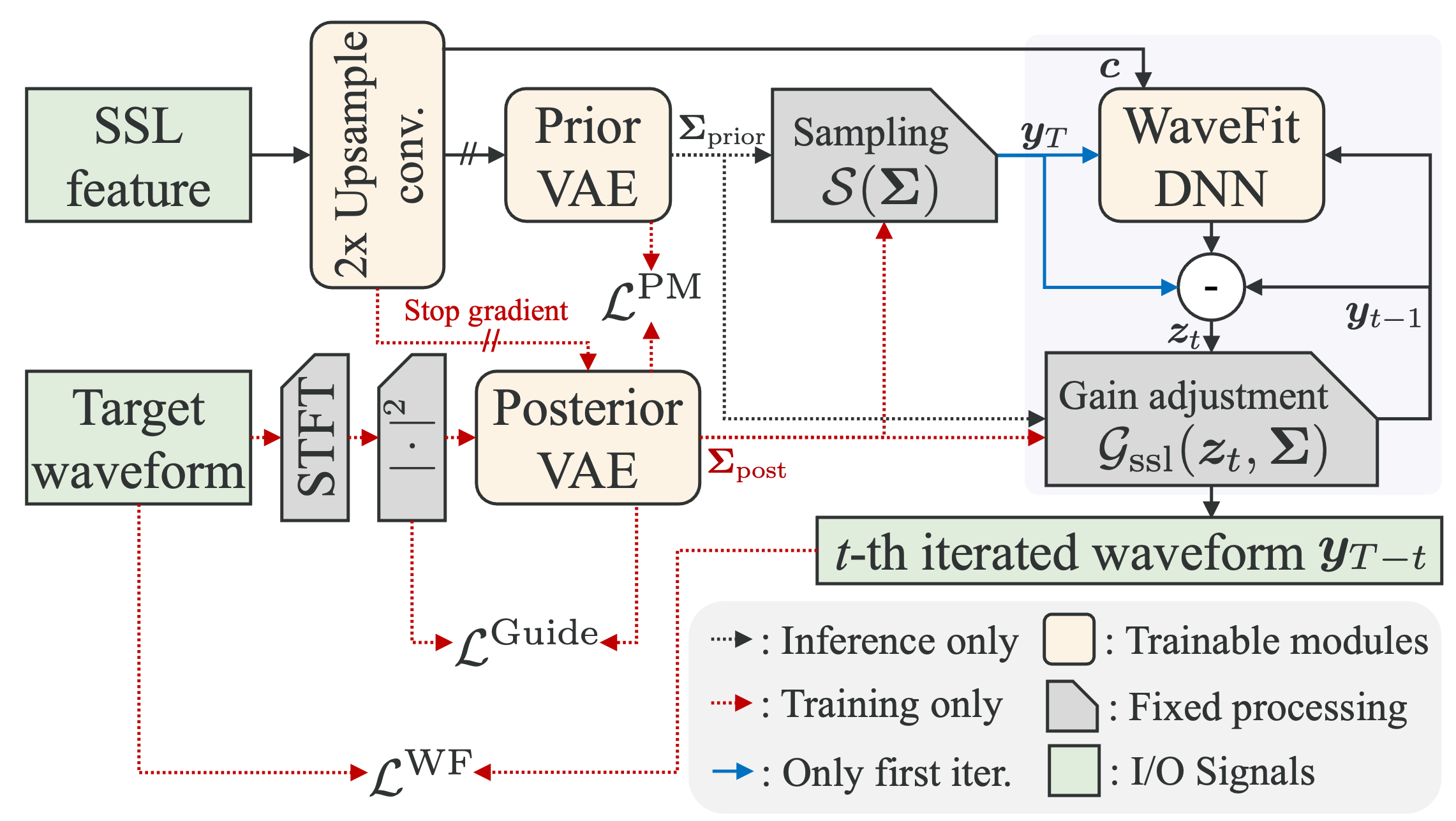

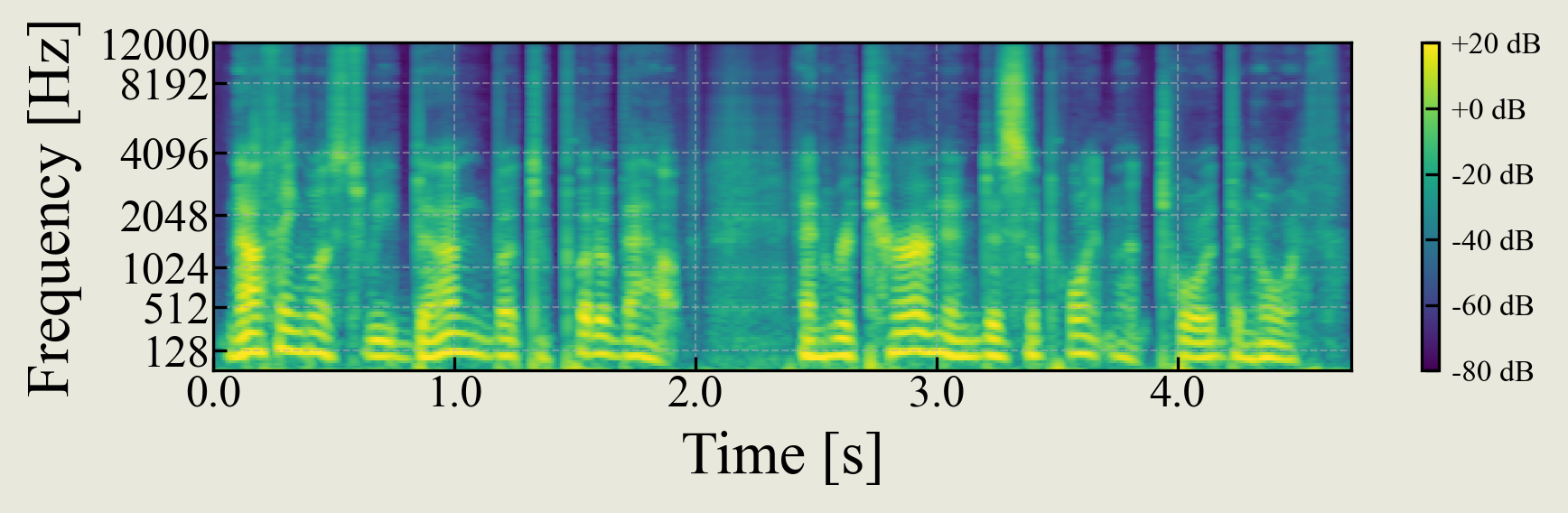

We propose a neural vocoder with trainable prior and fixed-point iteration (WaveTrainerFit) for improved waveform generation from SSL features. First, by introducing variational autoencoder (VAE)-based trainable priors, we sample initial noise $\mathcal{S}(\Sigma)$ that is close to the target waveform. Because inference starts from a point close to speech, we expect high-quality waveform generation with fewer iterations and robust preservation of speaker characteristics. Furthermore, by constraining the priors to match the energy of speech, we realize a reference-aware gain adjustment $\mathcal{G}_\mathrm{ssl}(z_t, \Sigma)$, which frees the vocoder from the implicit energy estimation task. As a result, the model can focus on more important aspects of waveform modeling, which we expect to ease training.
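The exact forms of the prior encoder, $\mathcal{S}(\Sigma)$, and $\mathcal{G}_\mathrm{ssl}$ are not given here, so the following is only a hypothetical sketch of the idea. It assumes a RestoreGrad-style zero-mean Gaussian prior with diagonal covariance $\Sigma = \mathrm{diag}(\sigma^2)$ predicted from the SSL features, draws the initial noise by reparameterization, and lets the gain match the power of $z_t$ to the power implied by $\Sigma$, in the same way $\mathcal{G}$ uses $P_c$. The names `sample_prior`, `gain_ssl`, and `energy_matching_loss` are illustrative, and the squared-error energy constraint is an assumption, not the paper's loss.

```python
import numpy as np


def sample_prior(log_var, rng):
    """Draw z_T ~ N(0, diag(sigma^2)) via the reparameterization trick.

    log_var is assumed to be the per-sample log-variance output by a
    hypothetical prior encoder (upsampled to waveform resolution).
    """
    sigma = np.exp(0.5 * log_var)
    eps = rng.standard_normal(log_var.shape)
    return sigma * eps


def gain_ssl(z_t, log_var, s=1e-8):
    """Hypothetical reference-aware gain G_ssl(z_t, Sigma).

    Rescales z_t so that its mean-square power matches mean(sigma^2),
    mirroring G(z_t, c) with P_c replaced by the prior's power.
    """
    p_sigma = np.mean(np.exp(log_var))
    p_z = np.mean(z_t ** 2)
    return np.sqrt(p_sigma / (p_z + s)) * z_t


def energy_matching_loss(log_var, x):
    """Hypothetical training constraint: squared gap between the prior's
    power and the power of the ground-truth waveform x."""
    return (np.mean(np.exp(log_var)) - np.mean(x ** 2)) ** 2


rng = np.random.default_rng(0)
log_var = np.full(16000, np.log(0.01))                # toy prior: sigma^2 = 0.01
z_T = sample_prior(log_var, rng)                      # initial point for the iteration
z_adj = gain_ssl(rng.standard_normal(16000), log_var)  # power ~0.01
```

If the energy constraint holds during training, $\Sigma$ carries an estimate of the speech power, so the gain can play the role that the ground-truth power $P_c$ plays for mel-spectrogram conditioning.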

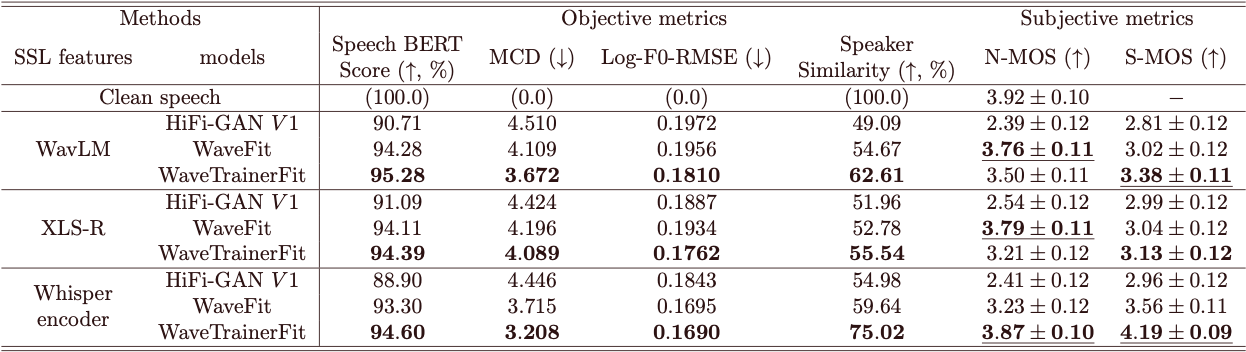

Overall results

We used the LibriTTS-R corpus [6]. For evaluation, we used the utterances in the “test-clean” subset. We used three feature extractors to obtain the conditioning features: WavLM [7], XLS-R [8], and Whisper-medium-encoder [9].

Speech samples

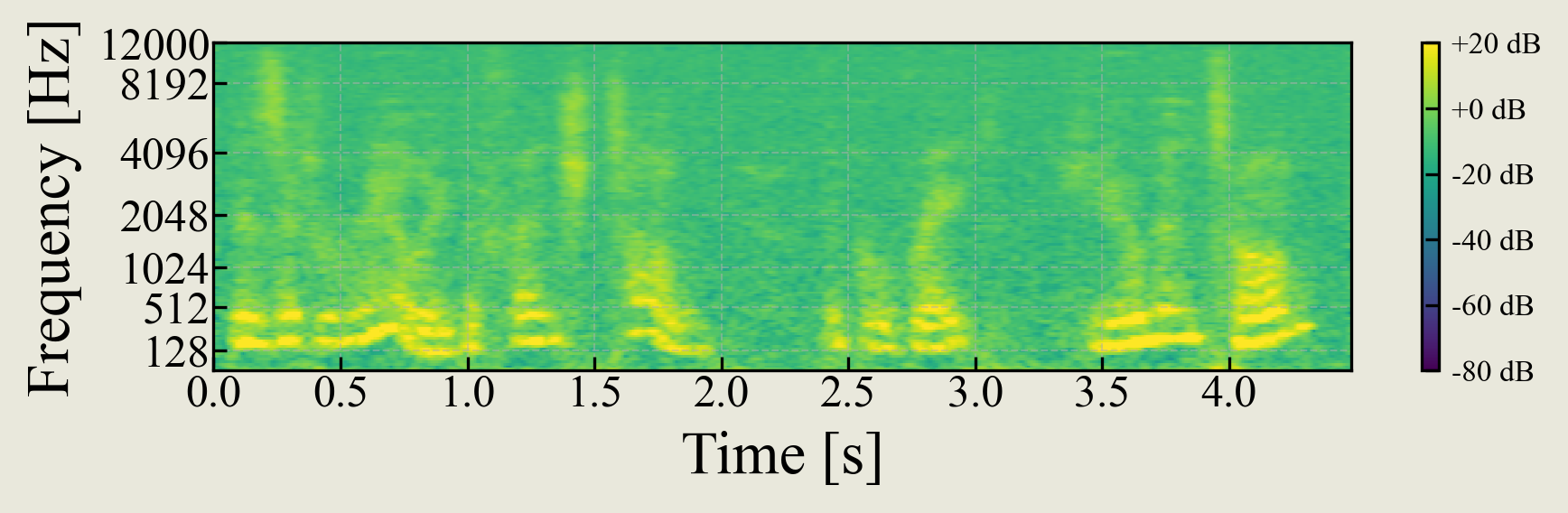

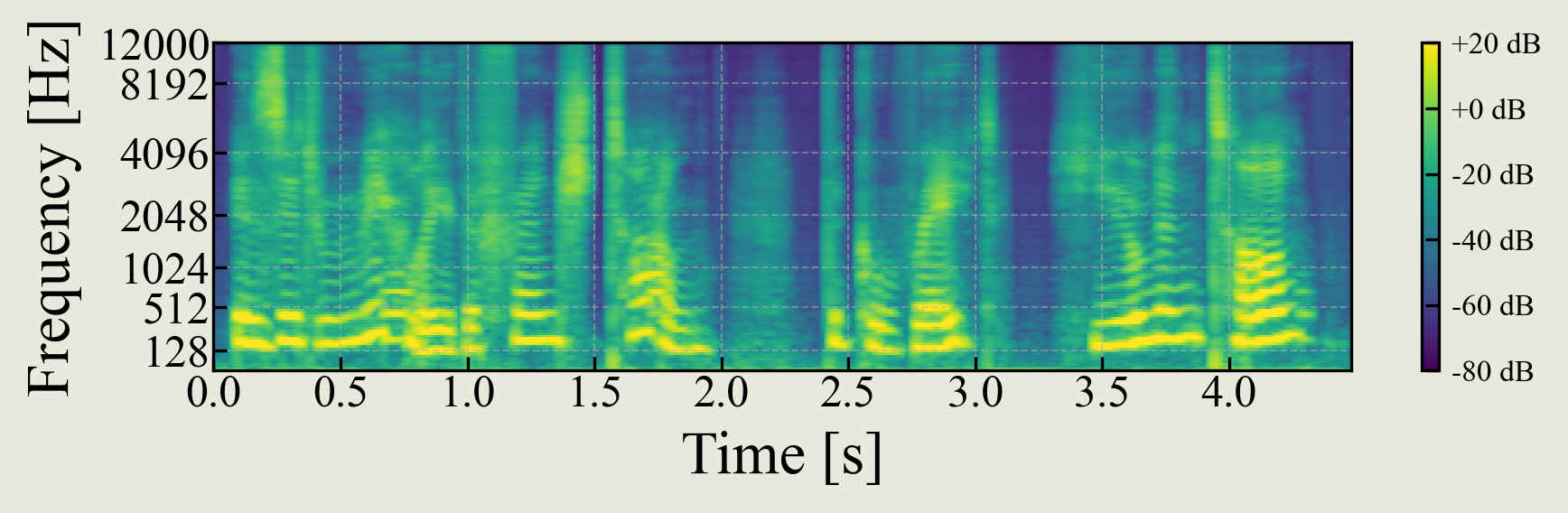

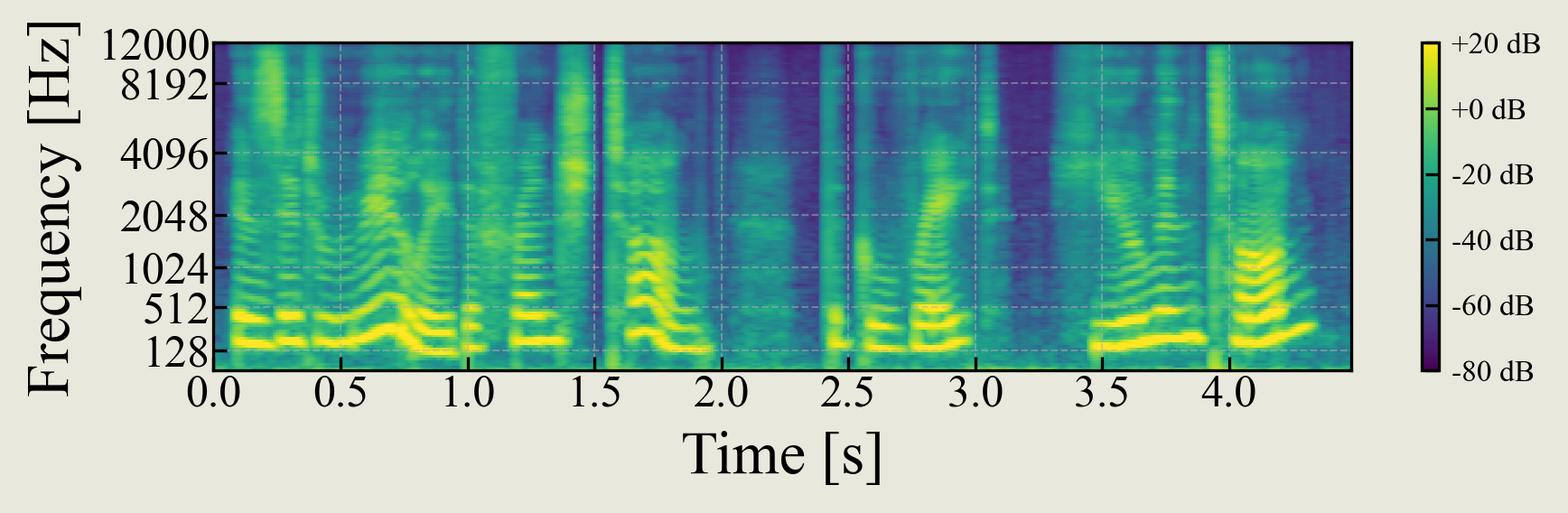

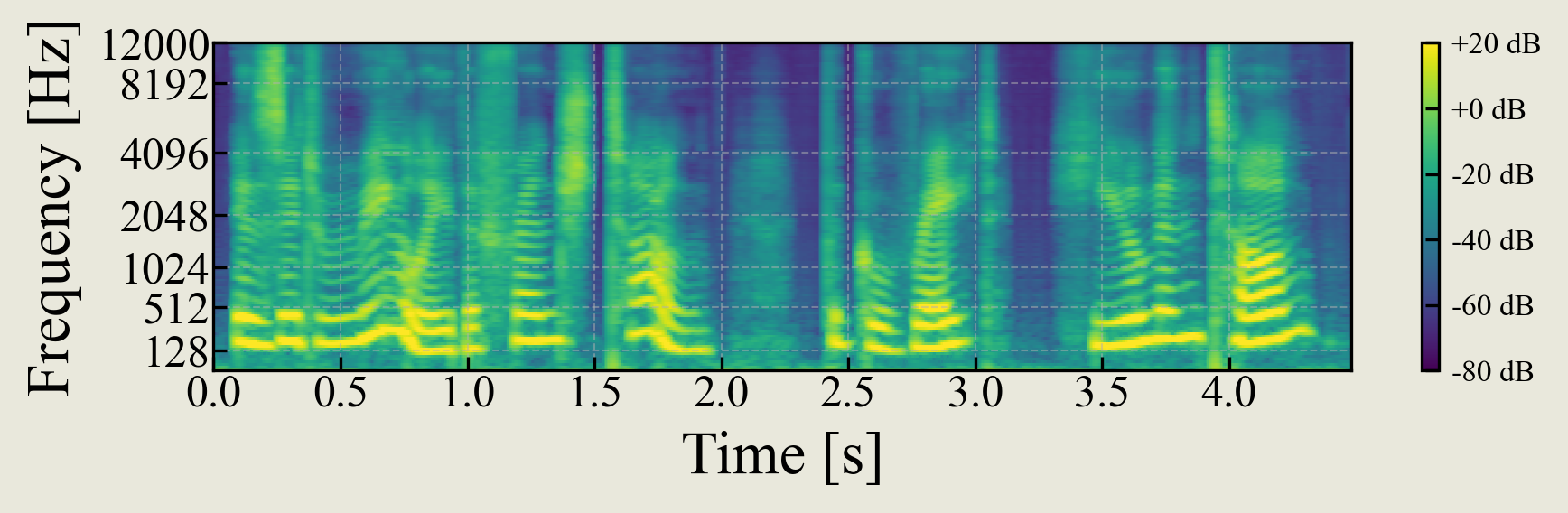

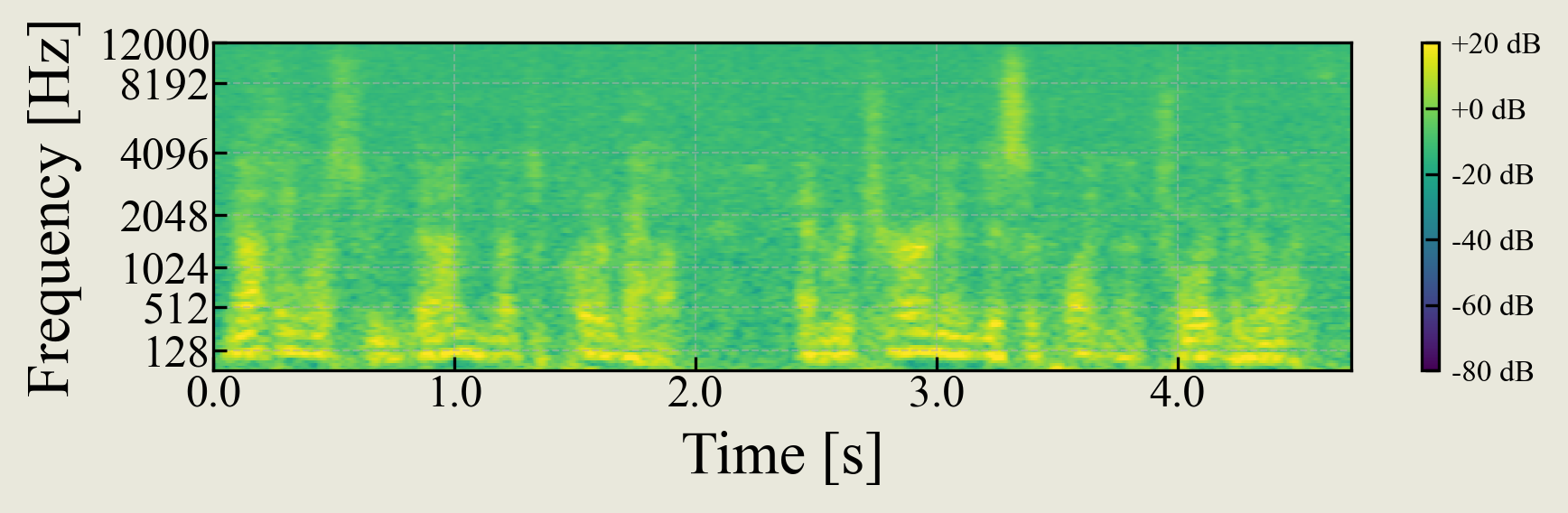

Compared to baselines

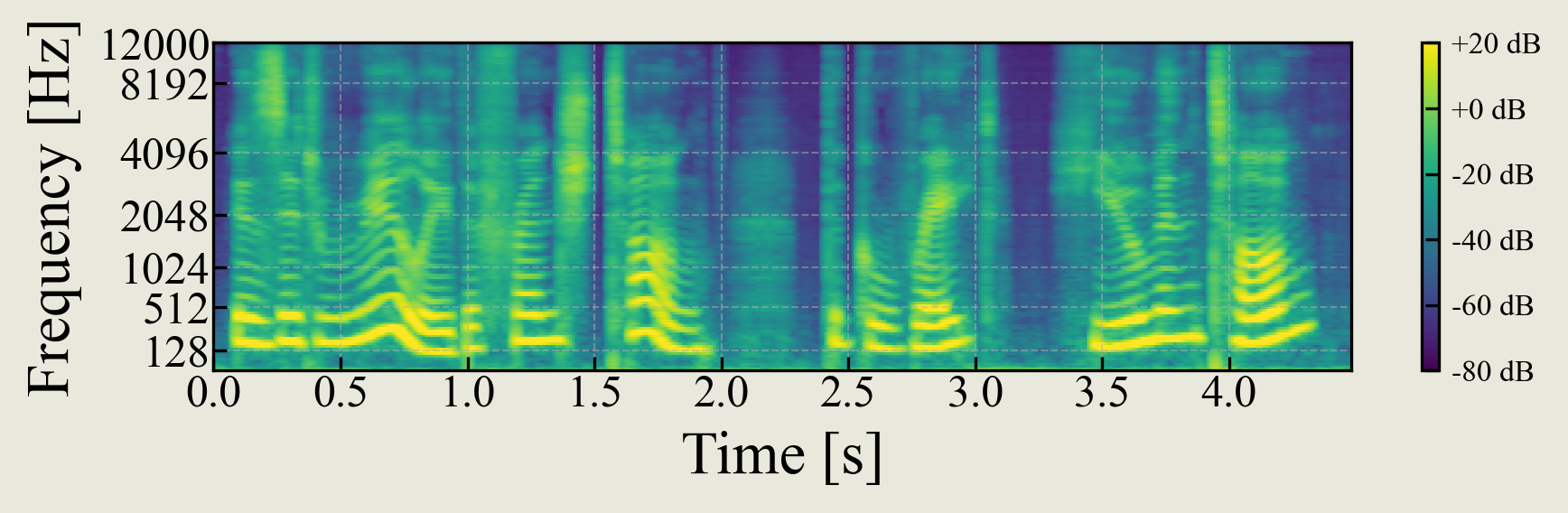

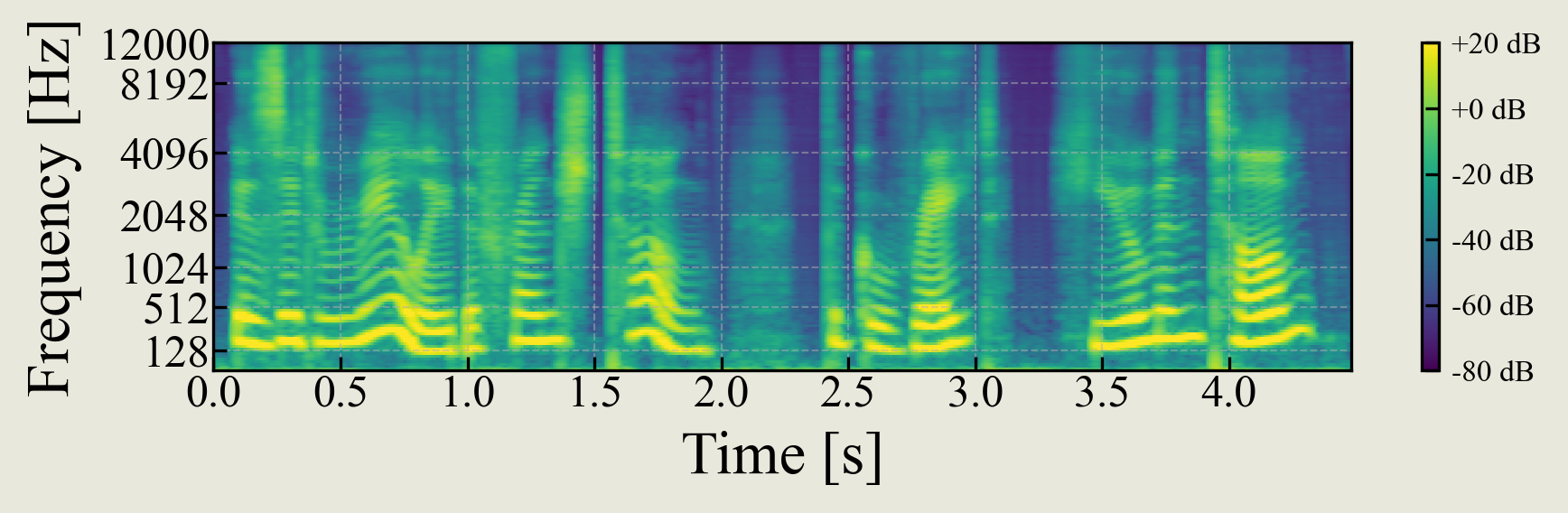

(middle-pitch, male)

"My eyes fail under the dazzling light, my ears are stunned with the incessant crash of thunder."

(high-pitch, female)

"I'll have 'Sizzle make a fine yard for the goat, where he'll have plenty of blue grass to eat."

(low-pitch, male)

"But in this vignette, copied from Turner, you have the two principles brought out perfectly."

(middle-pitch, female)

"But you mean to say you can't even advise her?"

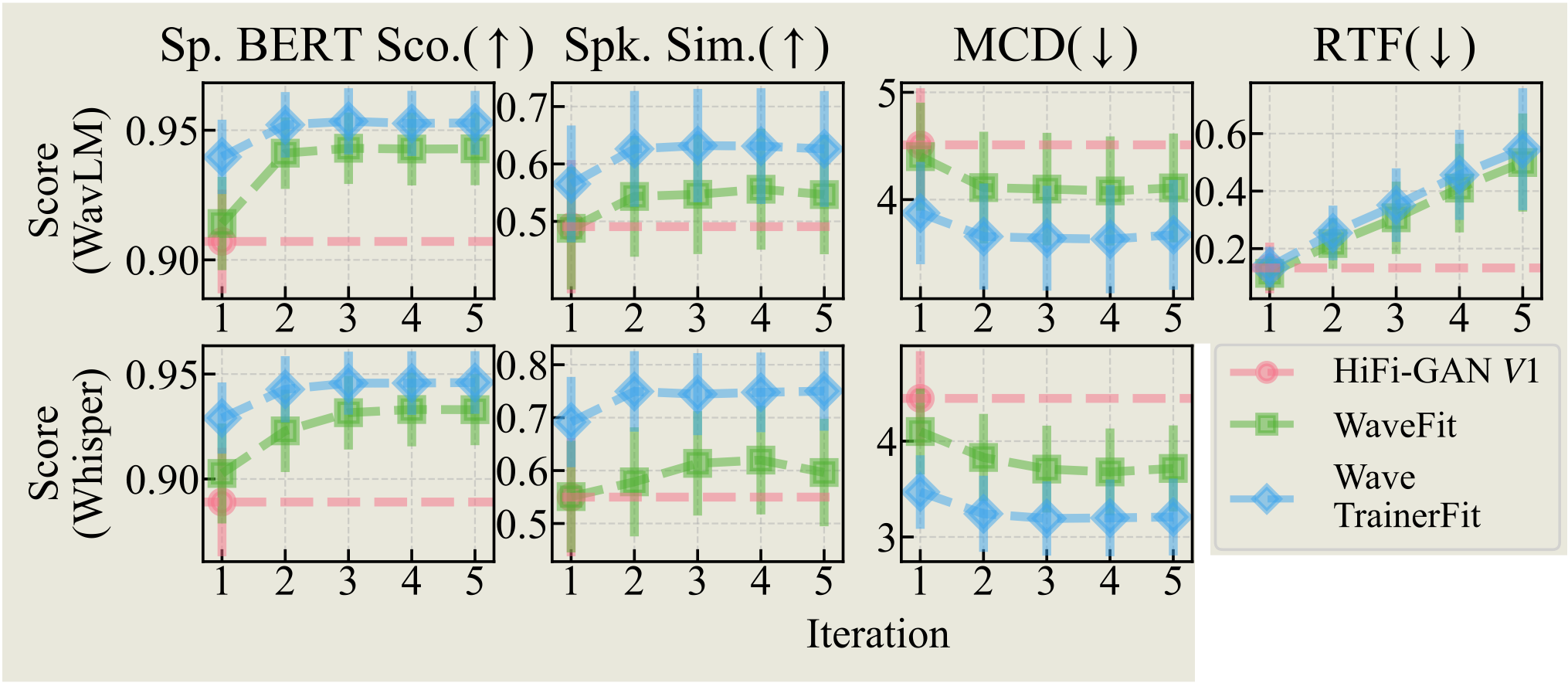

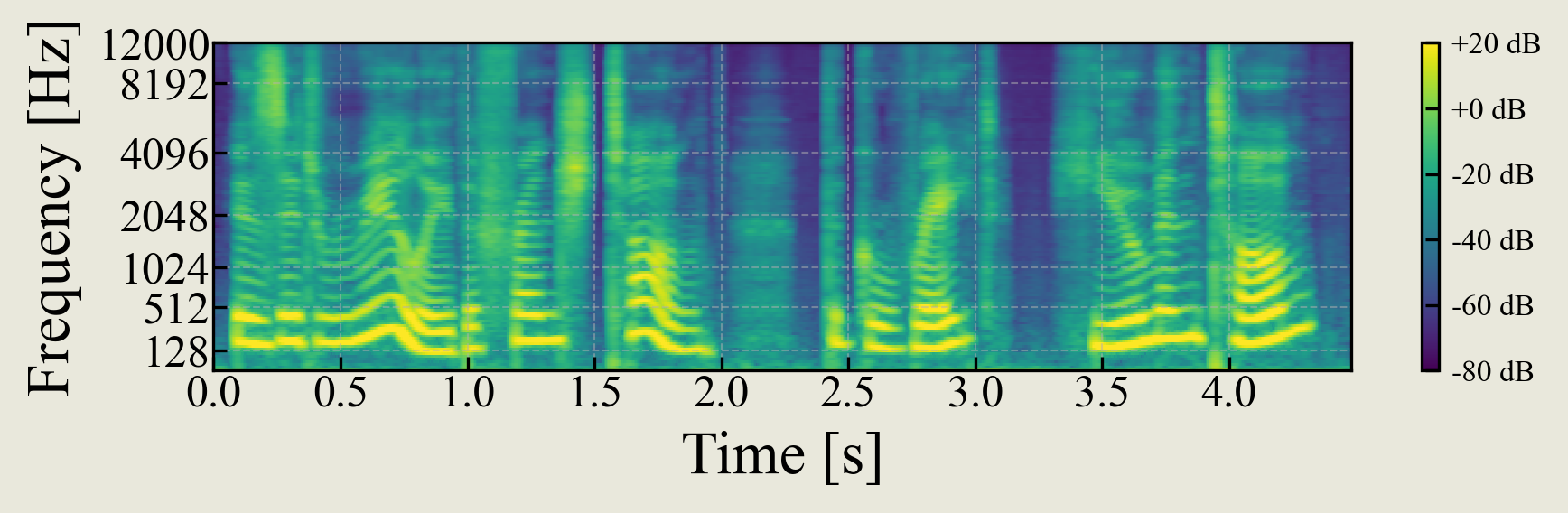

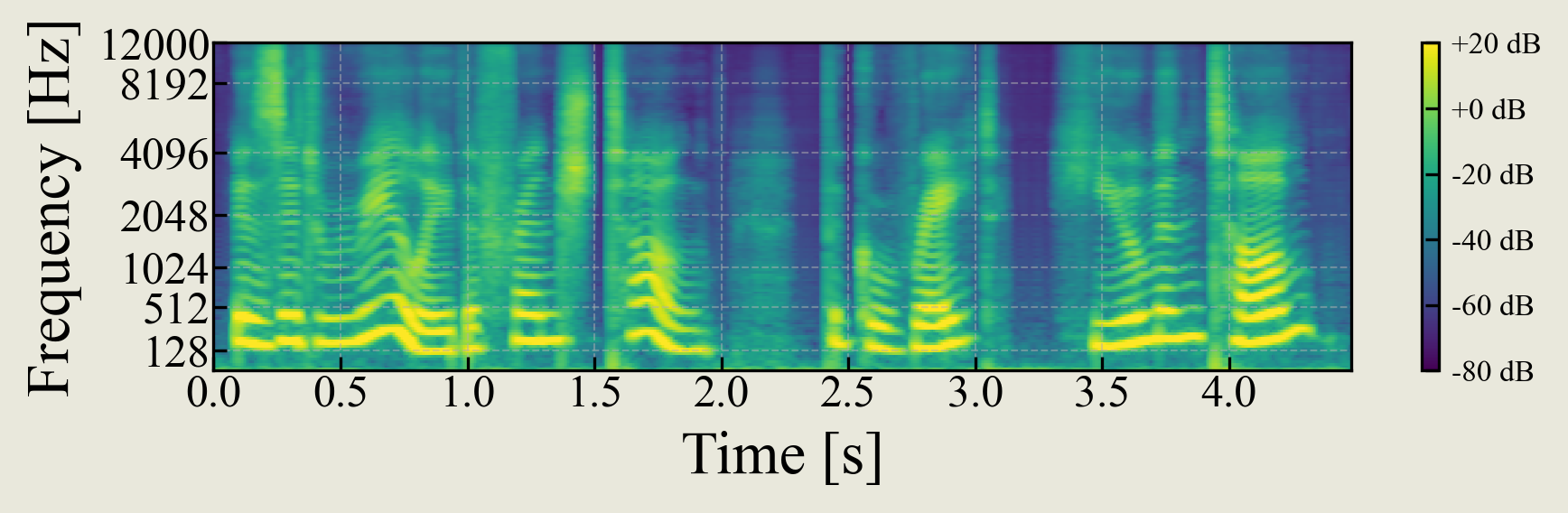

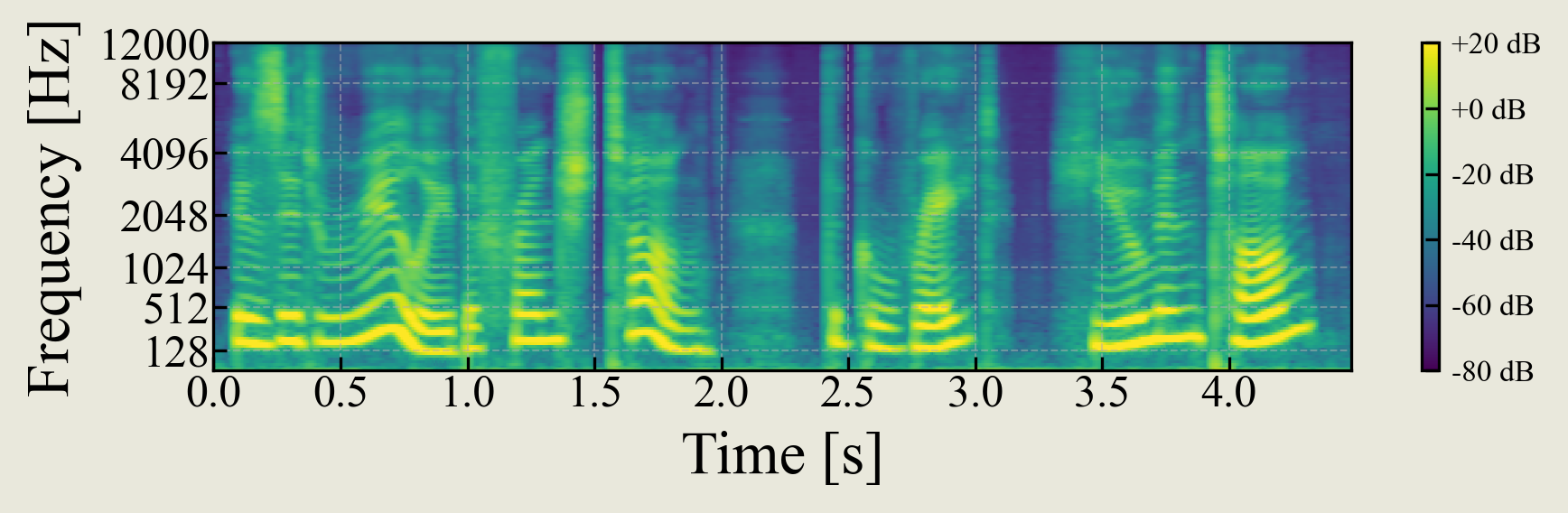

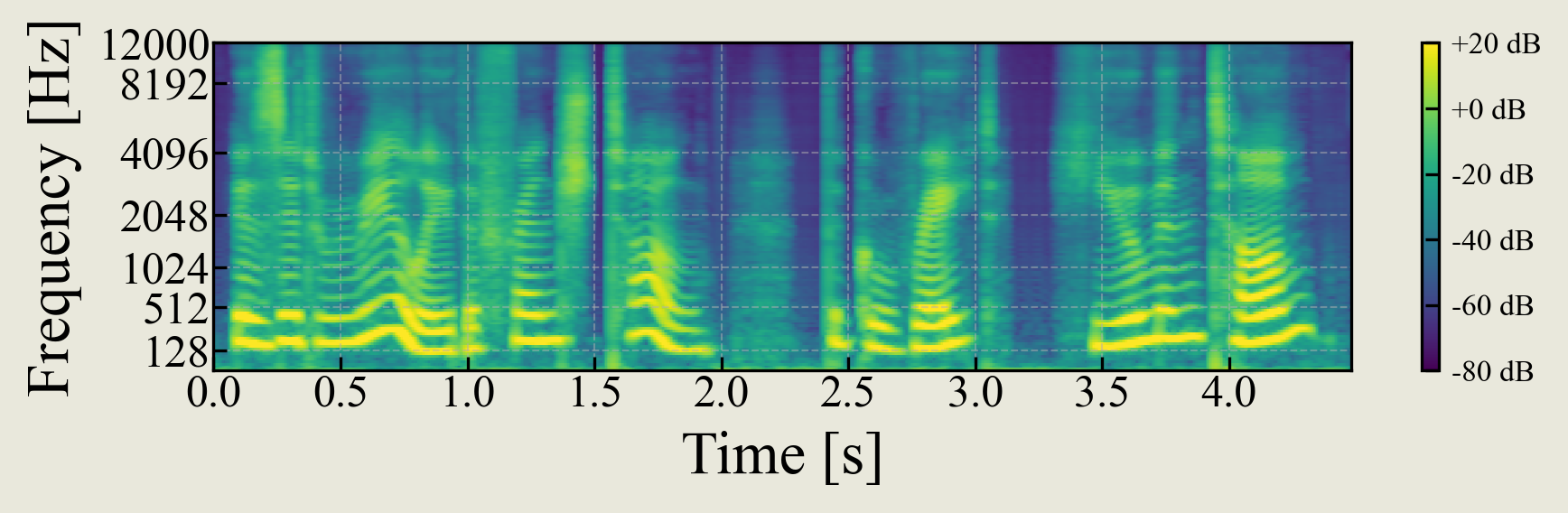

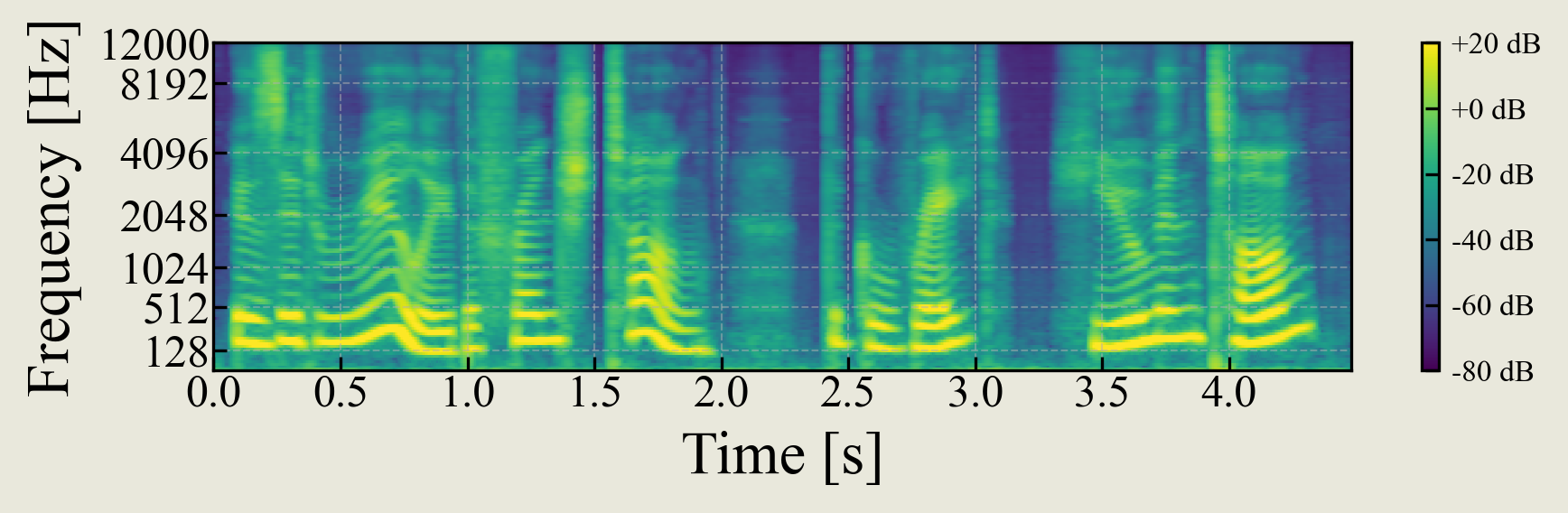

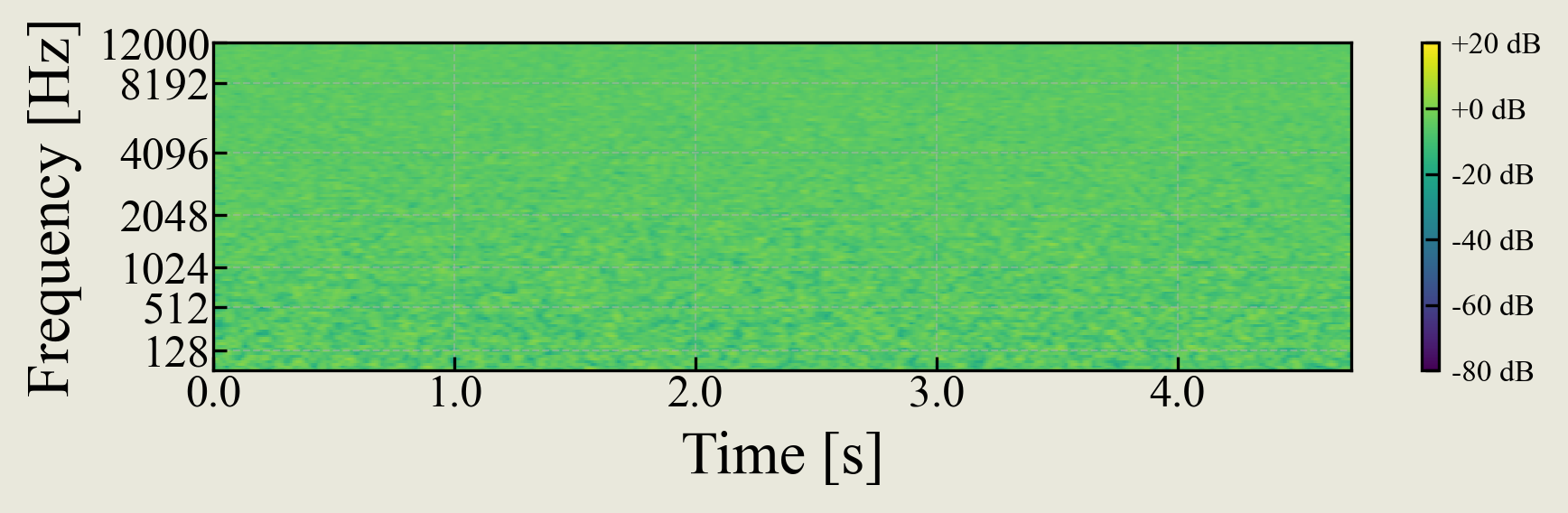

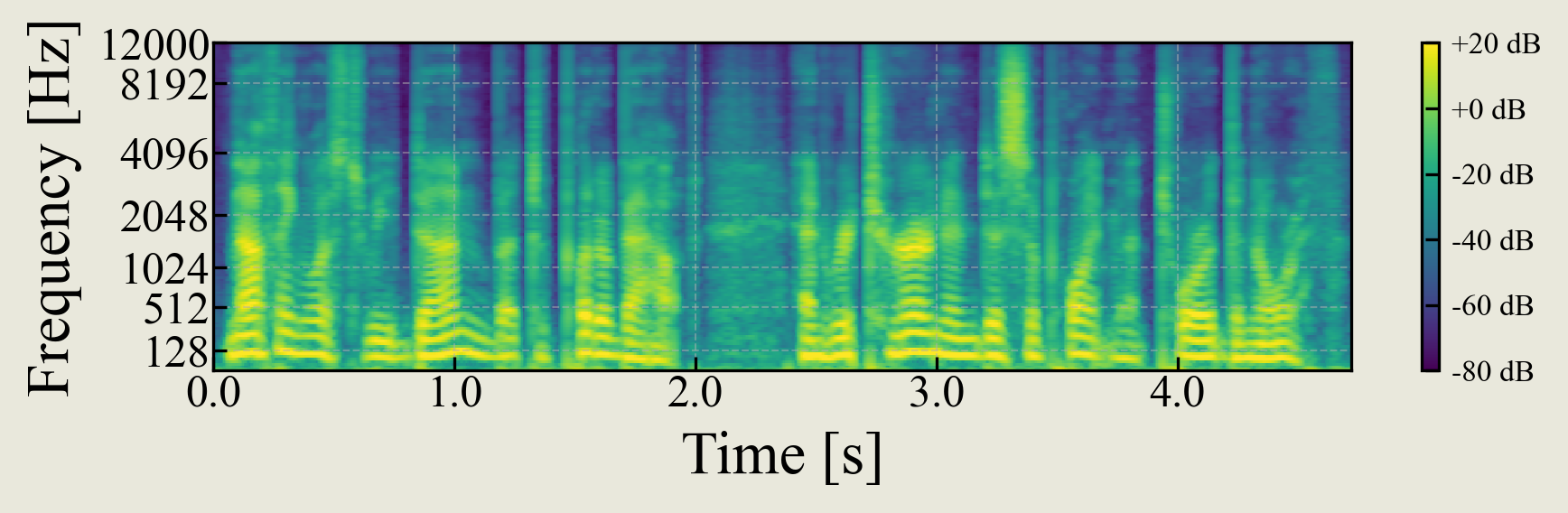

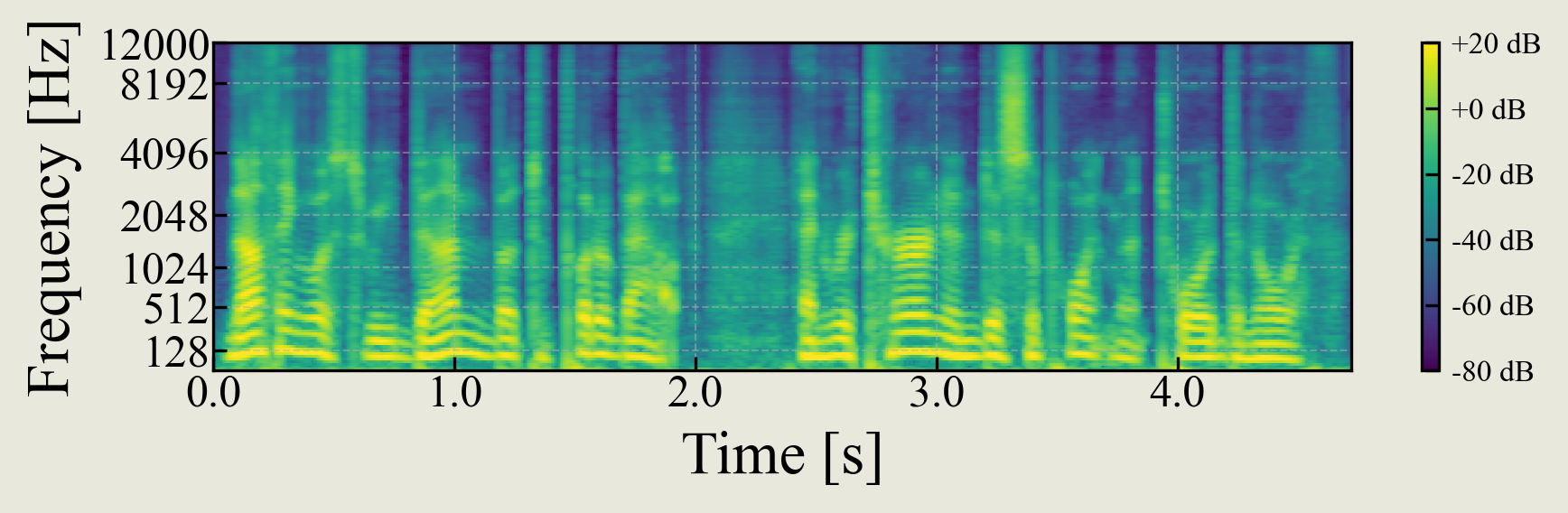

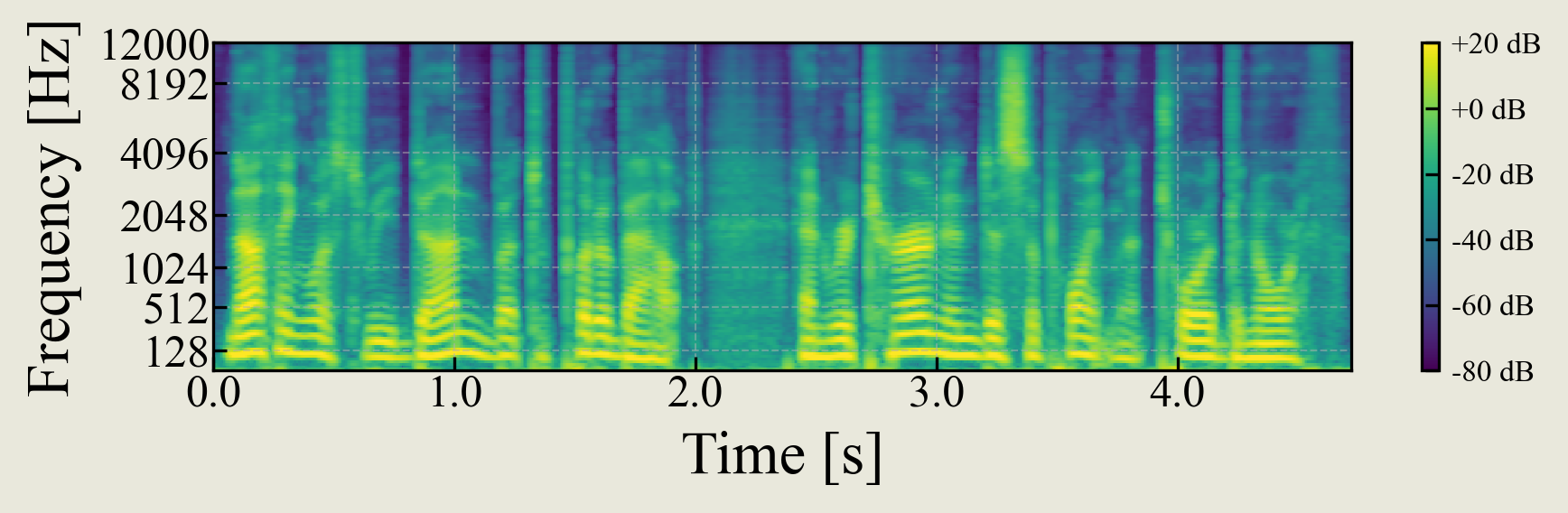

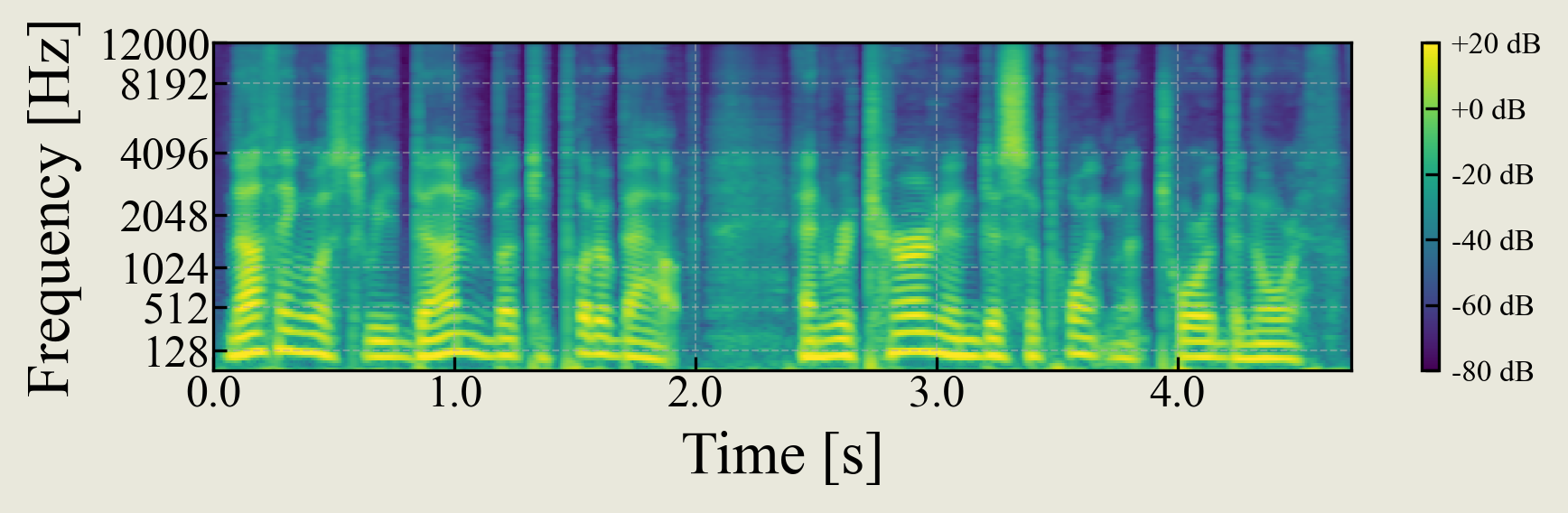

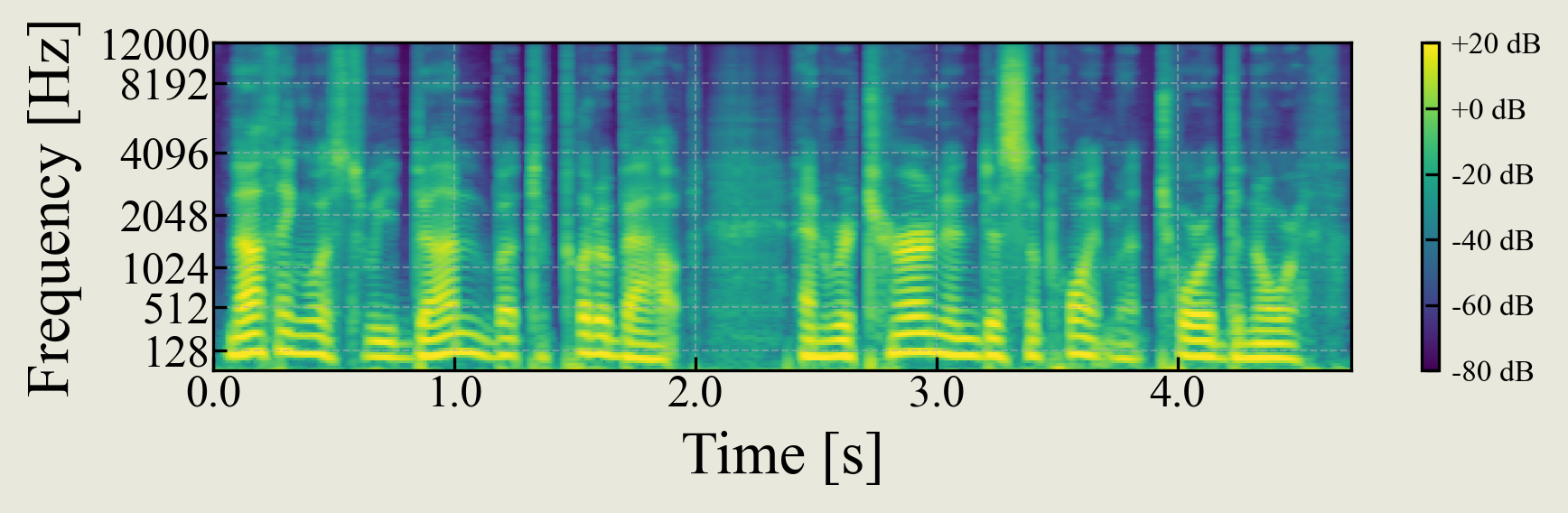

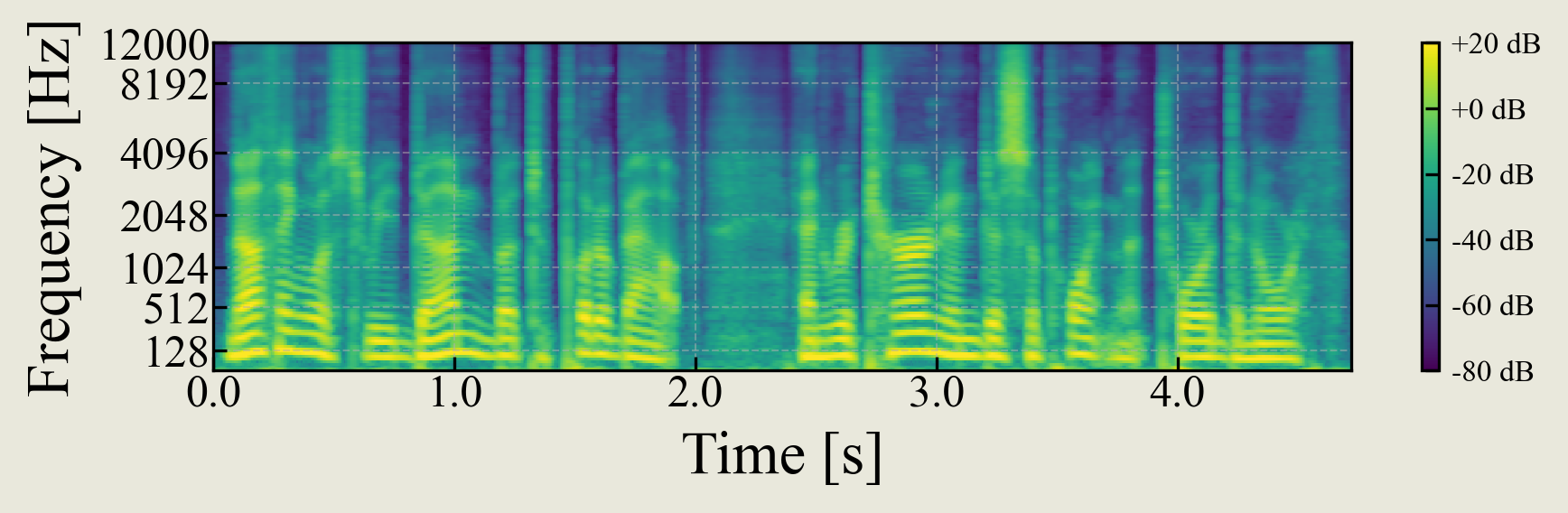

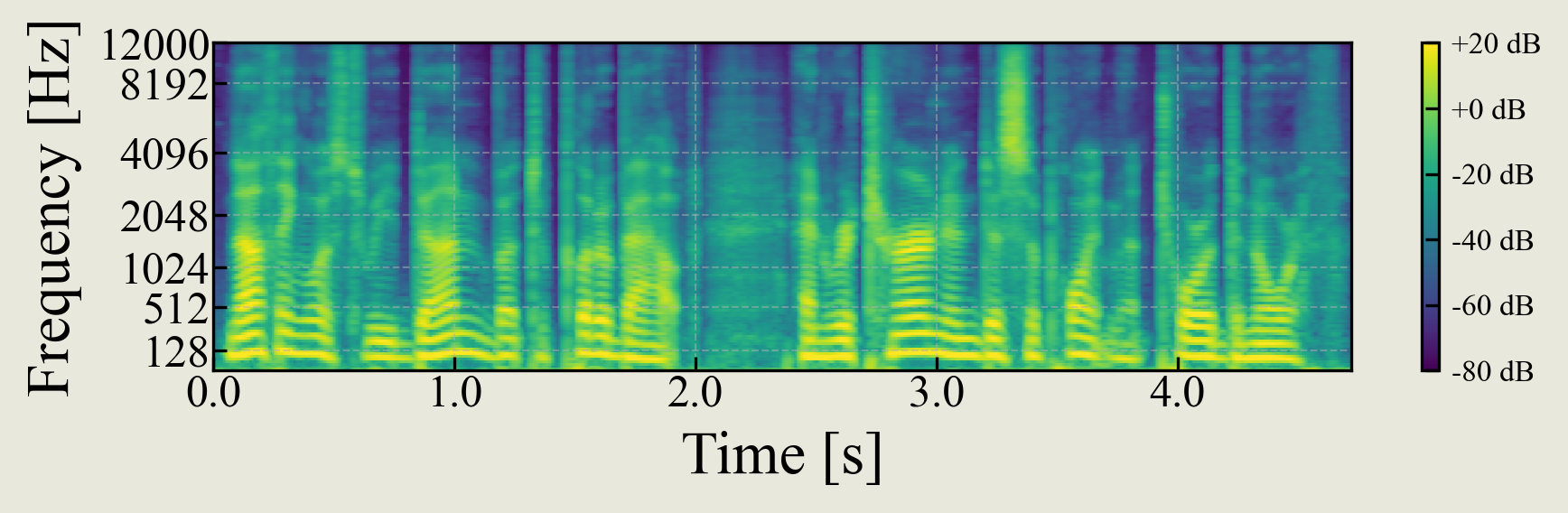

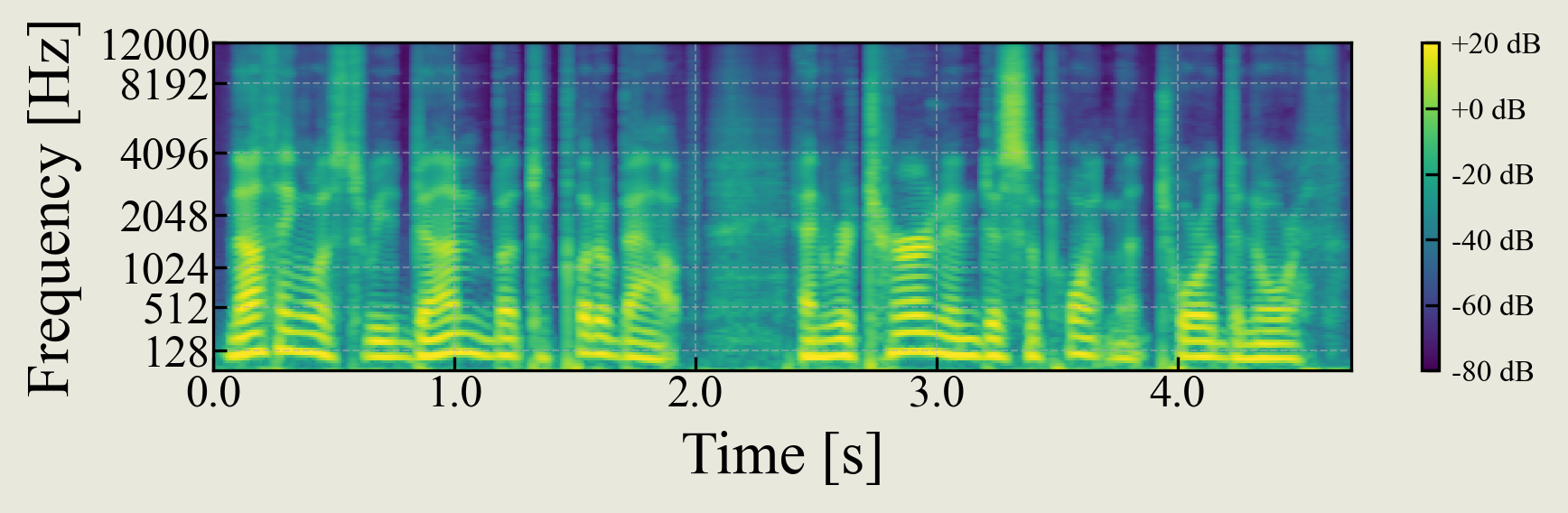

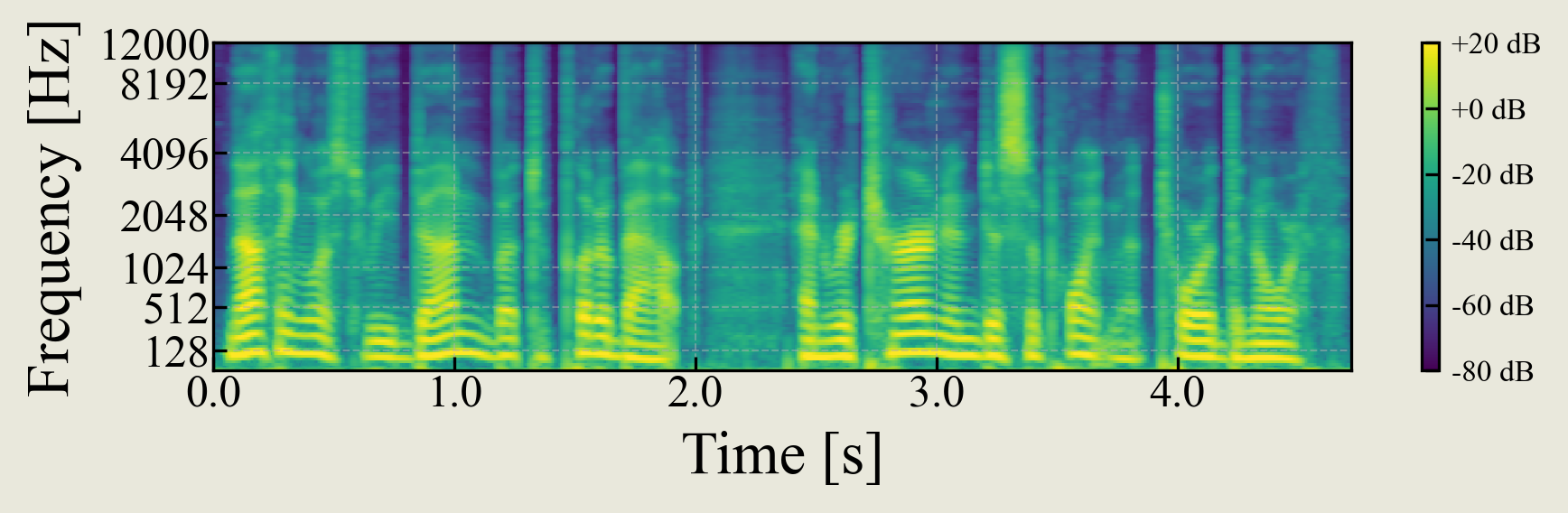

Impact of intermediate outputs

Objective evaluation results

Speech samples

"Is it really the French tongue, the great human tongue?"

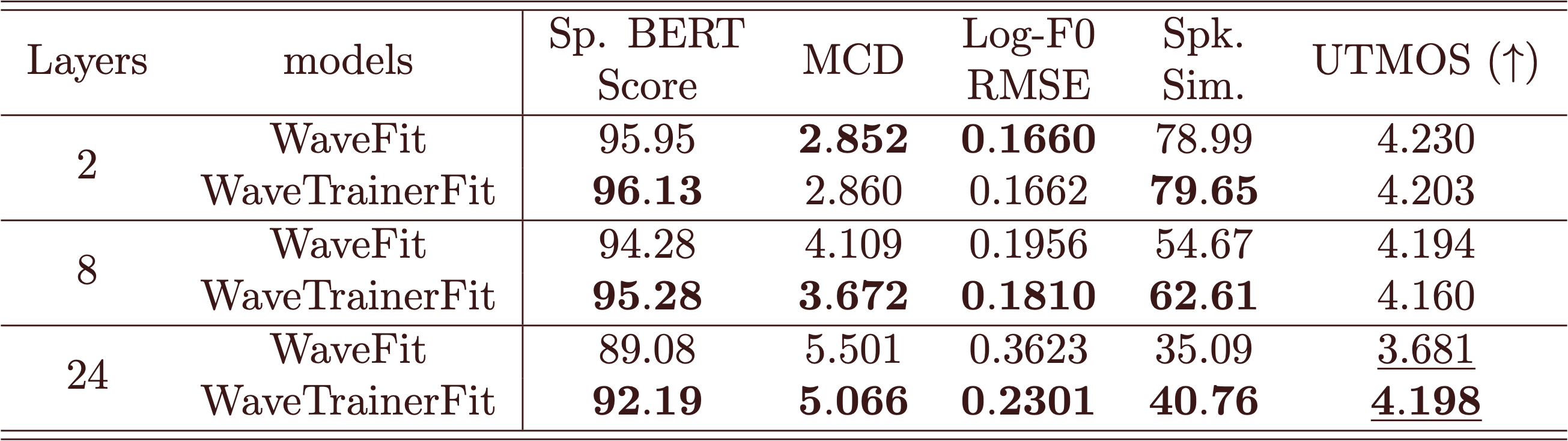

SSL layer-wise analysis

Features from shallow layers are generally known to retain much of the acoustic information of the input, whereas features from deeper layers carry more semantic information shaped by the training targets, such as pseudo-labels. To verify whether the proposed method works robustly across features with different properties, we evaluated it using features from WavLM layers 2 and 24.

Objective evaluation results

Speech samples

References

[1] Y. Koizumi, H. Zen, S. Karita et al., “Miipher: A robust speech restoration model integrating self-supervised speech and text representations,” in Proc. of IEEE WASPAA, 2023, pp. 1–5.

[2] T. Saeki, G. Wang, N. Morioka et al., “Extending multilingual speech synthesis to 100+ languages without transcribed data,” in Proc. of IEEE ICASSP, 2024, pp. 11,546–11,550.

[3] Y. Koizumi, K. Yatabe, H. Zen et al., “WaveFit: an iterative and non-autoregressive neural vocoder based on fixed-point iteration,” in Proc. of IEEE SLT, 2022, pp. 884–891.

[4] Y. Koizumi, H. Zen, K. Yatabe et al., “SpecGrad: Diffusion probabilistic model based neural vocoder with adaptive noise spectral shaping,” in Proc. of Interspeech, 2022, pp. 803–807.

[5] C. H. Lee, C. Yang, J. Cho et al., “RestoreGrad: Signal restoration using conditional denoising diffusion models with jointly learned prior,” in Proc. of ICML, 2025.

[6] Y. Koizumi, H. Zen, S. Karita et al., “LibriTTS-R: A restored multi-speaker text-to-speech corpus,” in Proc. of Interspeech, 2023, pp. 5496–5500.

[7] S. Chen, C. Wang, Z. Chen et al., “WavLM: Large-scale self-supervised pre-training for full stack speech processing,” IEEE J. Sel. Top. Signal Process., vol. 16, no. 6, pp. 1505–1518, 2022.

[8] A. Babu, C. Wang, A. Tjandra et al., “XLS-R: Self-supervised cross-lingual speech representation learning at scale,” in Proc. of Interspeech, 2022, pp. 2278–2282.

[9] A. Radford, J. W. Kim, T. Xu et al., “Robust speech recognition via large-scale weak supervision,” in Proc. of ICML, vol. 202, 2023, pp. 28,492–28,518.